
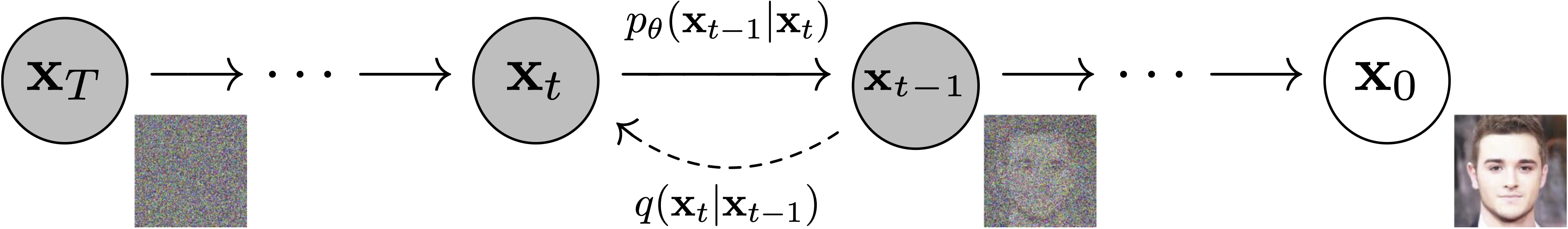

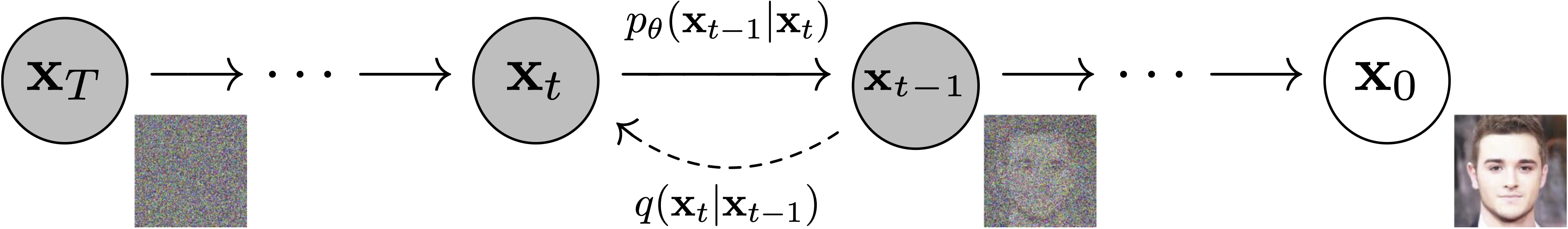

Figure from DDPM paper (https://arxiv.org/abs/2006.11239).


**Schedulers**: Algorithm class for both **inference** and **training**. The class provides functionality to compute the previous image according to the alpha and beta schedule, as well as to predict noise for training. *Examples*: [DDPM](https://arxiv.org/abs/2006.11239), [DDIM](https://arxiv.org/abs/2010.02502), [PNDM](https://arxiv.org/abs/2202.09778), [DEIS](https://arxiv.org/abs/2204.13902)


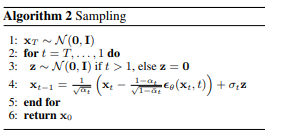

Sampling and training algorithms. Figure from DDPM paper (https://arxiv.org/abs/2006.11239).
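
To make the scheduler's two roles concrete, here is a minimal pure-Python sketch of the DDPM training-side noising and inference-side reverse step. It is an illustration under stated assumptions, not the library's implementation: the class name `ToyDDPMScheduler` and its method names are hypothetical, samples are plain lists of floats, the linear beta schedule uses the DDPM paper's defaults, and the variance is fixed to σ_t² = β_t (one of the two choices discussed in the paper).

```python
import math
import random


class ToyDDPMScheduler:
    """Illustrative DDPM-style scheduler (hypothetical; not the diffusers API)."""

    def __init__(self, num_train_timesteps=1000, beta_start=1e-4, beta_end=0.02):
        # Linear beta schedule between beta_start and beta_end.
        step = (beta_end - beta_start) / (num_train_timesteps - 1)
        self.betas = [beta_start + i * step for i in range(num_train_timesteps)]
        self.alphas = [1.0 - b for b in self.betas]
        # Cumulative products: alpha_bar_t = alpha_0 * alpha_1 * ... * alpha_t.
        self.alphas_cumprod = []
        running = 1.0
        for a in self.alphas:
            running *= a
            self.alphas_cumprod.append(running)

    def add_noise(self, x0, noise, t):
        """Training side: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
        abar = self.alphas_cumprod[t]
        return [math.sqrt(abar) * x + math.sqrt(1.0 - abar) * n
                for x, n in zip(x0, noise)]

    def step(self, predicted_noise, t, x_t, rng=random):
        """Inference side: one reverse step from x_t to the previous sample."""
        beta, alpha, abar = self.betas[t], self.alphas[t], self.alphas_cumprod[t]
        coef = beta / math.sqrt(1.0 - abar)
        mean = [(x - coef * e) / math.sqrt(alpha)
                for x, e in zip(x_t, predicted_noise)]
        if t == 0:
            return mean  # no fresh noise is added at the final step
        sigma = math.sqrt(beta)  # assumption: sigma_t^2 = beta_t
        return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


scheduler = ToyDDPMScheduler(num_train_timesteps=10)
x0 = [0.5, -0.25]
noise = [random.gauss(0.0, 1.0) for _ in x0]
x_t = scheduler.add_noise(x0, noise, t=0)
# Feeding the true noise back in as the "prediction" undoes the noising at t=0.
restored = scheduler.step(noise, 0, x_t)
```

In this sketch the scheduler holds no neural network: it only needs the predicted noise as input, which is what allows the same model to be paired with different schedulers.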


**Diffusion Pipeline**: End-to-end pipeline that includes multiple diffusion models, possibly text encoders, ... *Examples*: GLIDE, Latent-Diffusion, Imagen, DALL-E 2


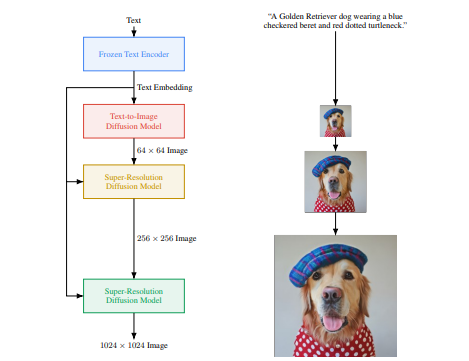

Figure from Imagen (https://imagen.research.google/).
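
The way a pipeline ties the pieces together can be sketched as follows. Everything here is hypothetical and chosen only for illustration: `ToyPipeline`, the placeholder `encode_prompt` and `identity_model` functions, and `HalvingScheduler` are stand-ins, not library components, and a real pipeline would use trained networks and a real scheduler.

```python
import random


class ToyPipeline:
    """Hypothetical end-to-end pipeline: text encoder + denoising model + scheduler."""

    def __init__(self, text_encoder, model, scheduler, num_steps):
        self.text_encoder = text_encoder
        self.model = model
        self.scheduler = scheduler
        self.num_steps = num_steps

    def __call__(self, prompt, size, seed=0):
        rng = random.Random(seed)
        cond = self.text_encoder(prompt)                # conditioning signal
        x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # start from pure noise
        for t in reversed(range(self.num_steps)):
            eps = self.model(x, t, cond)                # model predicts the noise
            x = self.scheduler.step(eps, t, x)          # scheduler computes previous sample
        return x


# Placeholder components so the sketch runs end to end (not real models).
def encode_prompt(prompt):
    return float(len(prompt))


def identity_model(x, t, cond):
    return list(x)  # pretends the whole sample is noise


class HalvingScheduler:
    def step(self, predicted_noise, t, x_t):
        # Removes half of the predicted noise at every step.
        return [x - 0.5 * e for x, e in zip(x_t, predicted_noise)]


pipeline = ToyPipeline(encode_prompt, identity_model, HalvingScheduler(), num_steps=4)
sample = pipeline("a photo of a cat", size=3, seed=0)
```

Because the pipeline only calls `model(...)` and `scheduler.step(...)`, either component can be swapped without touching the other.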


## Philosophy

- Readability and clarity are preferred over highly optimized code. Strong emphasis is placed on readable, intuitive, and elementary code design. *E.g.*, the provided [schedulers](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers) are separated from the provided [models](https://github.com/huggingface/diffusers/tree/main/src/diffusers/models) and provide well-commented code that can be read alongside the original paper.