docs: Flash Attention Conceptual Guide (#892)

PR for conceptual guide on flash attention. I will add more info unless I'm told otherwise.

---------

Co-authored-by: Nicolas Patry <patry.nicolas@protonmail.com>
Co-authored-by: Omar Sanseviero <osanseviero@gmail.com>

parent 059bb5cf83
commit f260eb72f9

@@ -21,4 +21,6 @@
 - sections:
   - local: conceptual/streaming
     title: Streaming
+  - local: conceptual/flash_attention
+    title: Flash Attention
   title: Conceptual Guides

@@ -0,0 +1,12 @@

# Flash Attention


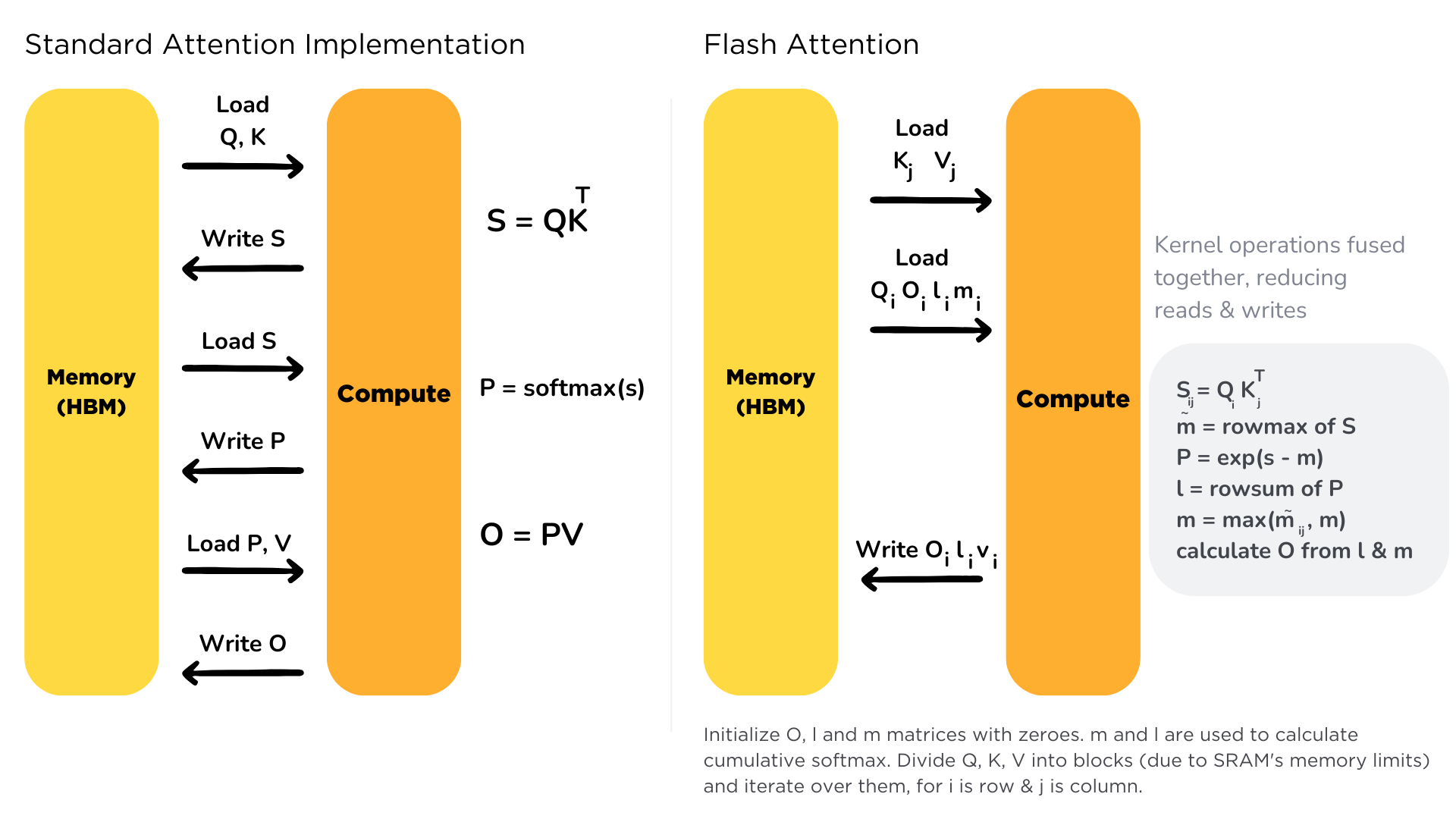

Scaling the transformer architecture is heavily bottlenecked by the self-attention mechanism, which has quadratic time and memory complexity in the sequence length. Recent developments in accelerator hardware have mainly increased compute capacity, while memory and data transfer between hardware components have improved far less. As a result, the attention operation is limited by memory access rather than by compute. **Flash Attention** is an attention algorithm that reduces this bottleneck so that transformer-based models scale more efficiently, enabling faster training and inference.
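To make the quadratic memory cost concrete, here is a small back-of-the-envelope sketch. It assumes the full attention score matrix is materialized for a single head and stored in 16-bit floats (2 bytes per element); the function name and defaults are illustrative and do not come from any library.

```python
def attention_scores_bytes(seq_len, num_heads=1, batch_size=1, bytes_per_element=2):
    """Bytes needed to hold the full (seq_len x seq_len) attention score matrix.

    Assumption: one score per (query, key) pair, stored in 16-bit floats.
    """
    return batch_size * num_heads * seq_len * seq_len * bytes_per_element


for n in (1024, 2048, 4096):
    mib = attention_scores_bytes(n) / 2**20
    print(f"seq_len={n}: {mib:.0f} MiB per head")
```

Doubling the sequence length quadruples the size of the score matrix, which is why long sequences quickly become memory-bound.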


The standard attention mechanism uses High Bandwidth Memory (HBM) to store, read, and write keys, queries, and values. HBM has a large capacity but is slow to access, whereas on-chip SRAM is much smaller but much faster. In the standard attention implementation, the cost of loading and writing keys, queries, and values from HBM is high: it loads them from HBM into GPU on-chip SRAM, performs a single step of the attention mechanism, writes the result back to HBM, and repeats this for every attention step. Flash Attention instead loads keys, queries, and values once, fuses the operations of the attention mechanism, and writes the result back.
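The fusion described above relies on processing keys and values block by block while keeping a running softmax, so the full score matrix is never stored. The following NumPy sketch illustrates that idea only. It does not model HBM or SRAM, it is not the actual GPU kernel, and the function names and `block_size` parameter are hypothetical choices made for this example.

```python
import numpy as np


def standard_attention(q, k, v):
    """Reference implementation: materializes the full (n x n) score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v


def tiled_attention(q, k, v, block_size=64):
    """Processes keys/values one block at a time with a running softmax."""
    n, d = q.shape
    scale = 1.0 / np.sqrt(d)
    out = np.zeros((n, v.shape[-1]))      # unnormalized output accumulator
    row_max = np.full((n, 1), -np.inf)    # running max of scores per query
    row_sum = np.zeros((n, 1))            # running softmax denominator

    for start in range(0, k.shape[0], block_size):
        k_blk = k[start:start + block_size]
        v_blk = v[start:start + block_size]
        scores = (q @ k_blk.T) * scale    # only an (n x block) tile of scores

        new_max = np.maximum(row_max, scores.max(axis=-1, keepdims=True))
        correction = np.exp(row_max - new_max)  # rescales earlier partial results
        p = np.exp(scores - new_max)

        row_sum = row_sum * correction + p.sum(axis=-1, keepdims=True)
        out = out * correction + p @ v_blk
        row_max = new_max

    return out / row_sum


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((100, 16)) for _ in range(3))
print(np.allclose(standard_attention(q, k, v), tiled_attention(q, k, v, block_size=32)))
```

Both functions compute the same result; the tiled version only ever holds one block of scores at a time, which is what lets a fused kernel keep its working set in fast on-chip memory.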


Flash Attention is implemented for supported models. You can check out the complete list of models that support Flash Attention [here](https://github.com/huggingface/text-generation-inference/tree/main/server/text_generation_server/models); supported models are the ones with a `flash` prefix.


You can learn more about Flash Attention by reading [the paper](https://arxiv.org/abs/2205.14135).
