Before this change, the generation input was sent to the backend as a single string, with images encoded as Base64 and packed into Markdown-style links. This change adds a chunked input representation that separates text chunks from image chunks. Image chunks carry the image's binary data (for smaller message sizes) and its MIME type. The stringly-typed inputs are still sent to support backends that do not yet support chunked inputs.
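To make the two representations concrete, here is a minimal sketch of what a chunked input and its string fallback could look like. The names `InputChunk`, `to_legacy_string`, and `base64_encode` are hypothetical and are not taken from the actual change. The exact link shape (`![](data:<mime>;base64,<payload>)`) is also an assumption based on the "Markdown-style links" wording above. The Base64 encoder is hand-rolled only to keep the example dependency-free.

```rust
/// One piece of a generation input (hypothetical type, for illustration only).
#[derive(Debug, Clone, PartialEq)]
pub enum InputChunk {
    /// Plain prompt text.
    Text(String),
    /// Raw image bytes plus their MIME type, e.g. "image/png".
    Image { data: Vec<u8>, mimetype: String },
}

const B64: &[u8; 64] = b"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/// Standard padded Base64, written out by hand to avoid external crates.
pub fn base64_encode(bytes: &[u8]) -> String {
    let mut out = String::with_capacity((bytes.len() + 2) / 3 * 4);
    for group in bytes.chunks(3) {
        // Pack up to three bytes into a 24-bit value, zero-filling the tail.
        let b1 = *group.get(1).unwrap_or(&0);
        let b2 = *group.get(2).unwrap_or(&0);
        let n = (u32::from(group[0]) << 16) | (u32::from(b1) << 8) | u32::from(b2);
        out.push(B64[(n >> 18) as usize & 63] as char);
        out.push(B64[(n >> 12) as usize & 63] as char);
        // Emit '=' padding where the input group was shorter than three bytes.
        out.push(if group.len() > 1 { B64[(n >> 6) as usize & 63] as char } else { '=' });
        out.push(if group.len() > 2 { B64[n as usize & 63] as char } else { '=' });
    }
    out
}

/// Flatten chunks into the single-string form: text is copied as-is and each
/// image becomes a Markdown-style link holding a Base64 data URI (assumed format).
pub fn to_legacy_string(chunks: &[InputChunk]) -> String {
    chunks
        .iter()
        .map(|chunk| match chunk {
            InputChunk::Text(text) => text.clone(),
            InputChunk::Image { data, mimetype } => {
                format!("![](data:{};base64,{})", mimetype, base64_encode(data))
            }
        })
        .collect()
}
```

Because Base64 expands every 3 bytes into 4 characters, sending the raw bytes in an image chunk is roughly a quarter smaller than the encoded string, which is consistent with the message-size motivation stated above.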



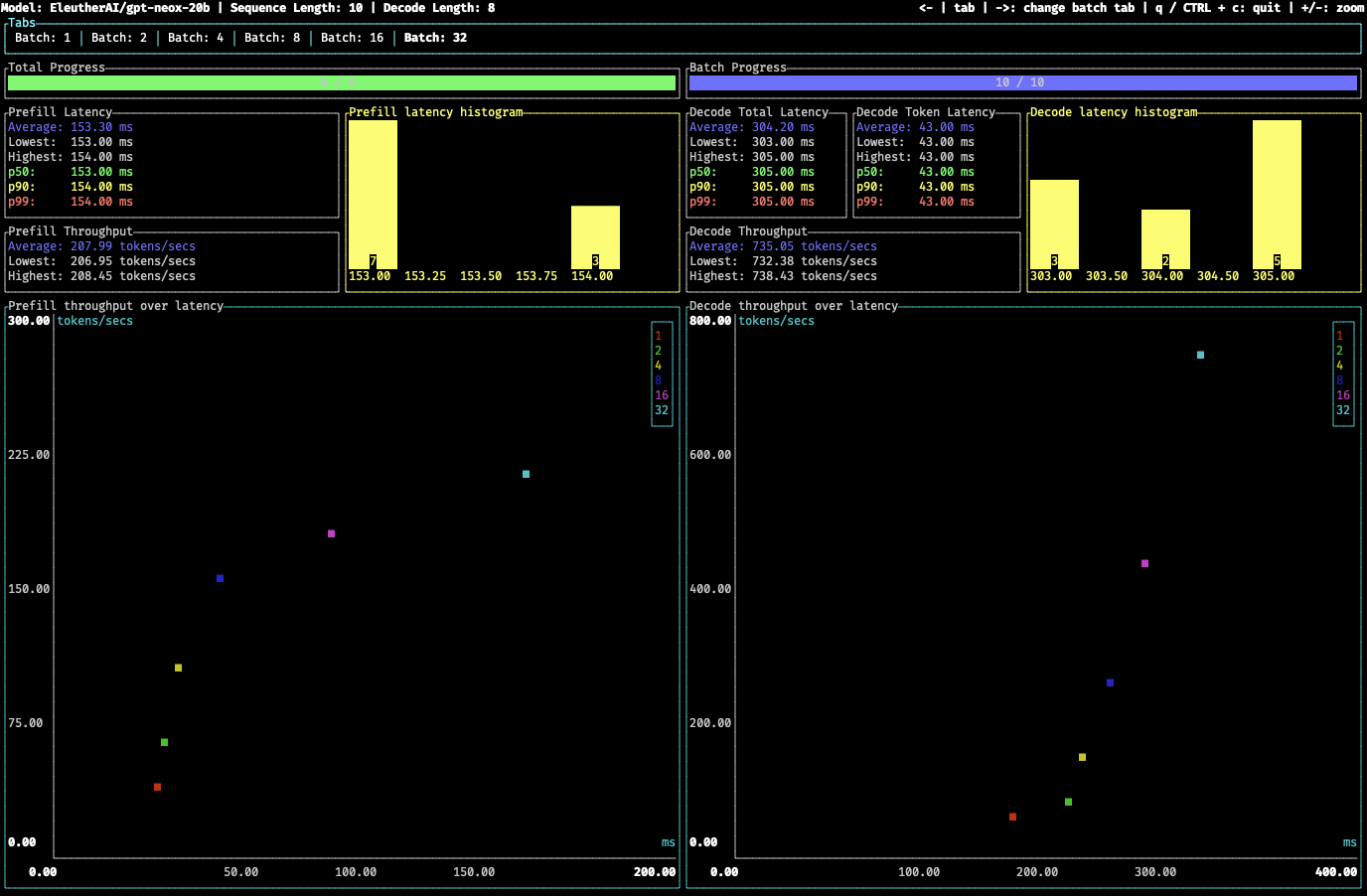

A lightweight benchmarking tool inspired by oha and powered by tui.

Install

make install-benchmark

Run

First, start text-generation-inference:

text-generation-launcher --model-id bigscience/bloom-560m

Then run the benchmarking tool:

text-generation-benchmark --tokenizer-name bigscience/bloom-560m