
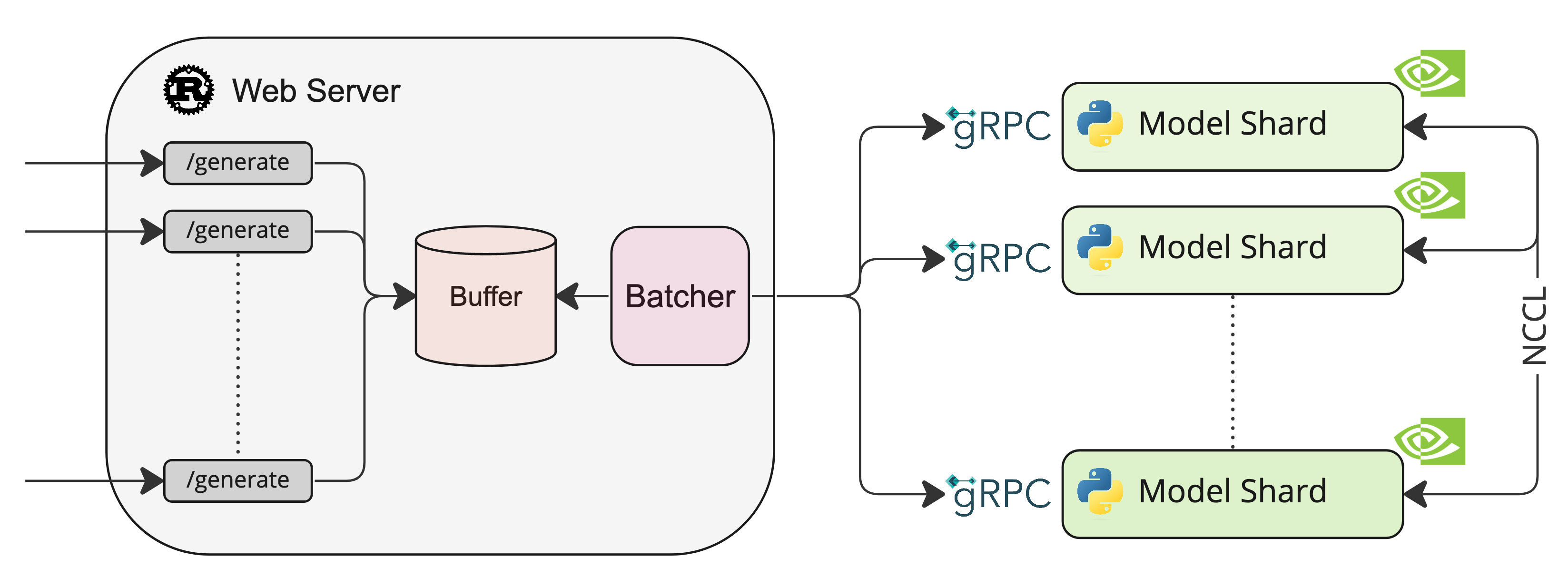

A Rust, Python and gRPC server for text generation inference. Used in production at Hugging Face to power LLM api-inference widgets.


Features

- Token streaming using Server-Sent Events (SSE)

- Dynamic batching of incoming requests for increased total throughput

- Quantization with bitsandbytes

- Safetensors weight loading

- 45ms per token generation for BLOOM with 8xA100 80GB

- Logits warpers (temperature scaling, top-k, repetition penalty, ...)

- Stop sequences

- Log probabilities
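To make the logits warpers in the list above concrete, here is a minimal sketch in plain Python. It is not the server's implementation: the function names are hypothetical, and the repetition penalty uses the common formulation (divide positive logits by the penalty, multiply negative ones), which is an assumption about how such a warper is typically defined.

```python
import math


def apply_repetition_penalty(logits, previous_ids, penalty):
    """Make already-generated token ids less likely (assumed common formulation)."""
    out = list(logits)
    for i in set(previous_ids):
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out


def apply_temperature(logits, temperature):
    """Values below 1.0 sharpen the distribution, values above 1.0 flatten it."""
    return [x / temperature for x in logits]


def apply_top_k(logits, k):
    """Keep the k highest logits and mask the rest; ties at the threshold are kept."""
    threshold = sorted(logits, reverse=True)[k - 1]
    return [x if x >= threshold else -math.inf for x in logits]


def softmax(logits):
    """Convert (possibly masked) logits into probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy vocabulary of four tokens; ids 0 and 3 were already generated.
logits = [2.0, 1.0, 0.5, -1.0]
warped = apply_repetition_penalty(logits, previous_ids=[0, 3], penalty=2.0)
warped = apply_temperature(warped, temperature=0.5)
warped = apply_top_k(warped, k=2)
probs = softmax(warped)
```

The warpers are applied in sequence before sampling, so each one only needs to map a list of logits to a new list of logits.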

Officially supported models

- BLOOM

- BLOOMZ

- MT0-XXL

- Galactica (deactivated)

- SantaCoder

- GPT-Neox 20B: use --revision pr/13

Other models are supported on a best effort basis using:

AutoModelForCausalLM.from_pretrained(<model>, device_map="auto")

or

AutoModelForSeq2SeqLM.from_pretrained(<model>, device_map="auto")

Get started

Docker

The easiest way of getting started is using the official Docker container:

model=bigscience/bloom-560m

num_shard=2

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

docker run --gpus all -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:latest --model-id $model --num-shard $num_shard

You can then query the model using either the /generate or /generate_stream routes:

curl 127.0.0.1:8080/generate \
    -X POST \
    -d '{"inputs":"Testing API","parameters":{"max_new_tokens":9}}' \
    -H 'Content-Type: application/json'

curl 127.0.0.1:8080/generate_stream \
    -X POST \
    -d '{"inputs":"Testing API","parameters":{"max_new_tokens":9}}' \
    -H 'Content-Type: application/json'
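The same two calls can be made from Python using only the standard library. The sketch below is a rough equivalent of the curl commands, with hypothetical helper names. It assumes the server is reachable at 127.0.0.1:8080 as in the Docker example, and that the streaming route emits standard SSE `data:` lines carrying JSON. The shape of the JSON responses is not documented here, so the helpers return the decoded objects as-is.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # assumption: matches the -p 8080:80 mapping


def build_request(route, prompt, max_new_tokens):
    """Build (without sending) a POST request equivalent to the curl calls."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        BASE_URL + route,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def iter_sse_data(lines):
    """Yield the decoded JSON payload of every `data:` line in an SSE stream."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if line.startswith("data:"):
            yield json.loads(line[len("data:"):].strip())


def generate(prompt, max_new_tokens=9):
    """Call /generate and return the decoded JSON response."""
    with urllib.request.urlopen(build_request("/generate", prompt, max_new_tokens)) as resp:
        return json.load(resp)


def generate_stream(prompt, max_new_tokens=9):
    """Call /generate_stream and yield one decoded event per SSE message."""
    request = build_request("/generate_stream", prompt, max_new_tokens)
    with urllib.request.urlopen(request) as resp:
        yield from iter_sse_data(resp)
```

With a server running, `generate("Testing API")` returns the full response at once, while `for event in generate_stream("Testing API"): print(event)` prints events as they arrive.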

To use GPUs, you will need to install the NVIDIA Container Toolkit.

API documentation

You can consult the OpenAPI documentation of the text-generation-inference REST API using the /docs route.

The Swagger UI is also available at: https://huggingface.github.io/text-generation-inference.

Local install

You can also opt to install text-generation-inference locally. You will need cargo and Python installed on your machine.

BUILD_EXTENSIONS=True make install # Install repository and HF/transformer fork with CUDA kernels

make run-bloom-560m

CUDA Kernels

The custom CUDA kernels are only tested on NVIDIA A100s. If you have any installation or runtime issues, you can disable the kernels by setting the BUILD_EXTENSIONS=False environment variable.

Be aware that the official Docker image has them enabled by default.

Run BLOOM

Download

First you need to download the weights:

make download-bloom

Run

make run-bloom # Requires 8xA100 80GB

Quantization

You can also quantize the weights with bitsandbytes to reduce the VRAM requirement:

make run-bloom-quantize # Requires 8xA100 40GB
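A back-of-the-envelope calculation shows why quantization lets the same model fit on 40GB cards. The sketch below counts weight memory only and rests on assumptions not stated in this README: BLOOM has roughly 176 billion parameters, unquantized weights use 2 bytes each (16-bit), quantized weights use 1 byte each (8-bit), and the weights are split evenly across the 8 GPUs. Activations and other runtime buffers need additional memory on top of these figures.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory taken by the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


BLOOM_PARAMS = 176e9  # assumption: approximate public parameter count
NUM_GPUS = 8          # both make targets above use 8 GPUs

fp16_total = weight_memory_gb(BLOOM_PARAMS, 2)  # 16-bit weights
int8_total = weight_memory_gb(BLOOM_PARAMS, 1)  # 8-bit quantized weights (assumed)

fp16_per_gpu = fp16_total / NUM_GPUS
int8_per_gpu = int8_total / NUM_GPUS

print(f"16-bit: {fp16_total:.0f} GB total, {fp16_per_gpu:.0f} GB per GPU")
print(f"8-bit:  {int8_total:.0f} GB total, {int8_per_gpu:.0f} GB per GPU")
```

Under these assumptions the 16-bit weights need about 44 GB per GPU, which exceeds a 40GB card but fits an 80GB one, while the 8-bit weights need about 22 GB per GPU.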

Develop

make server-dev

make router-dev

Testing

make python-tests

make integration-tests