2.6 KiB

2.6 KiB

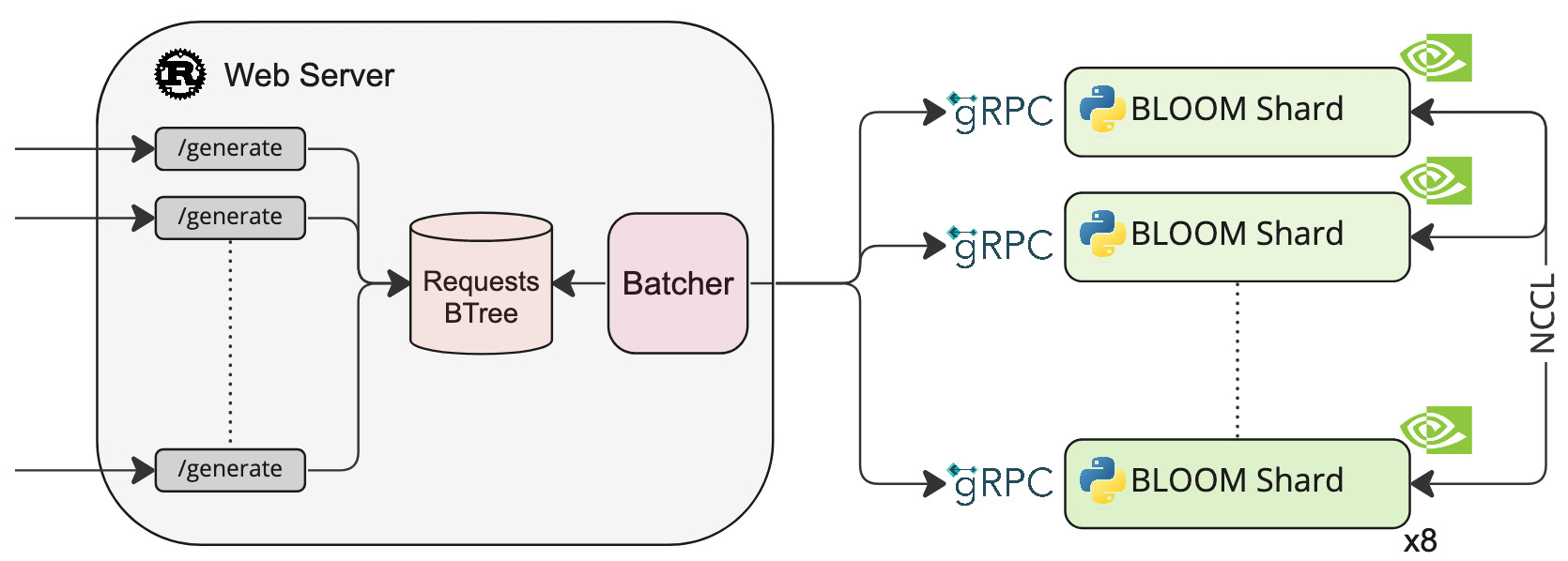

Text Generation Inference

A Rust and gRPC server for text generation inference. Used in production at HuggingFace to power Bloom, BloomZ and MT0-XXL api-inference widgets.

Features

- Dynamic batching of incoming requests for increased total throughput

- Quantization with bitsandbytes

- Safetensors weight loading

- 45ms per token generation for BLOOM with 8xA100 80GB

- Logits warpers (temperature scaling, topk ...)

- Stop sequences

- Log probabilities

Officially supported models

- BLOOM

- BLOOMZ

- MT0-XXL

Galactica(deactivated)- SantaCoder

- GPT-Neox 20B: use

--revision refs/pr/13

Other models are supported on a best effort basis using:

AutoModelForCausalLM.from_pretrained(<model>, device_map="auto")

or

AutoModelForSeq2SeqLM.from_pretrained(<model>, device_map="auto")

Load Tests for BLOOM

See k6/load_test.js

| avg | min | med | max | p(90) | p(95) | RPS | |

|---|---|---|---|---|---|---|---|

| Original code | 8.9s | 1s | 9.12s | 16.69s | 13.7s | 14.26s | 5.9 |

| New batching logic | 5.44s | 959.53ms | 5.28s | 13.12s | 7.78s | 8.92s | 9.08 |

Install

make install

Run

BLOOM 560-m

make run-bloom-560m

BLOOM

First you need to download the weights:

make download-bloom

make run-bloom # Requires 8xA100 80GB

You can also quantize the weights with bitsandbytes to reduce the VRAM requirement:

make run-bloom-quantize # Requires 8xA100 40GB

Test

curl 127.0.0.1:3000/generate \

-v \

-X POST \

-d '{"inputs":"Testing API","parameters":{"max_new_tokens":9}}' \

-H 'Content-Type: application/json'

Develop

make server-dev

make router-dev