# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet

though.

Once merged, your PR is going to appear in the release notes with the

title you set, so make sure it's a great title that fully reflects the

extent of your awesome contribution.

Then, please replace this with a description of the change and which

issue is fixed (if applicable). Please also include relevant motivation

and context. List any dependencies (if any) that are required for this

change.

Once you're done, someone will review your PR shortly (see the section

"Who can review?" below to tag some potential reviewers). They may

suggest changes to make the code even better. If no one reviewed your PR

after a week has passed, don't hesitate to post a new comment

@-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).
- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?
- [ ] Was this discussed/approved via a GitHub issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.
- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).
- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the

right person to tag with @

@OlivierDehaene OR @Narsil

-->


# What does this PR do?

```bash
text-generation-launcher --model-id XXX  # Uses CUDA graphs by default
text-generation-launcher --model-id XXX --cuda-graphs "1,2"  # Restrict CUDA graphs to batch sizes 1 and 2, which saves VRAM
text-generation-launcher --model-id XXX --cuda-graphs "0"  # Disables CUDA graphs entirely
```
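A minimal sketch of how a value such as `"1,2"` or `"0"` could be interpreted, assuming the value lists the batch sizes to capture graphs for and `"0"` disables capture. `parse_cuda_graphs` and `pick_graph` are hypothetical helpers, not TGI's code, and the "round a batch up to the smallest captured size, else run without a graph" rule is also an assumption made for illustration.

```python
from typing import Optional


def parse_cuda_graphs(value: str) -> list[int]:
    """Parse a comma-separated list of batch sizes; "0" disables CUDA graphs."""
    sizes = [int(item) for item in value.split(",") if item.strip()]
    if sizes == [0]:
        return []  # "0" means: capture no graphs at all
    return sorted(sizes)


def pick_graph(batch_size: int, captured: list[int]) -> Optional[int]:
    """Return the smallest captured size that fits the batch, or None (no graph)."""
    for size in captured:  # `captured` is sorted ascending
        if size >= batch_size:
            return size
    return None


captured = parse_cuda_graphs("1,2")
print(pick_graph(1, captured))  # 1
print(pick_graph(2, captured))  # 2
print(pick_graph(3, captured))  # None -> fall back to running without a graph
```

Capturing fewer sizes means fewer graphs held in memory, at the cost of larger batches falling back to the slower non-graph path.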


This PR correctly handles batches that mix constrained and unconstrained generations.

Currently, if a batch contains mixed generations, generation throws an error because it incorrectly attempts to constrain a request that has an empty grammar.

We now handle `None` grammars and only apply the mask when needed.

Fixes: https://github.com/huggingface/text-generation-inference/issues/1643
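A minimal sketch of the "only apply the mask if needed" idea, written with plain lists instead of tensors. It assumes each request's grammar has already been turned into a set of allowed token ids, with `None` for unconstrained requests; `apply_grammar_masks` is a hypothetical helper, not the function changed in this PR.

```python
import math
from typing import Optional


def apply_grammar_masks(
    logits: list[list[float]],
    allowed_tokens: list[Optional[set[int]]],
) -> list[list[float]]:
    """Mask logits row by row; rows whose grammar is None are left untouched."""
    masked = []
    for row, allowed in zip(logits, allowed_tokens):
        if allowed is None:
            # Unconstrained request: no grammar, so there is no mask to apply.
            masked.append(list(row))
            continue
        masked.append(
            [score if token in allowed else -math.inf for token, score in enumerate(row)]
        )
    return masked


batch_logits = [[0.1, 0.5, 0.2], [0.3, 0.3, 0.4]]
# Request 0 is constrained to tokens {0, 2}; request 1 has no grammar.
result = apply_grammar_masks(batch_logits, [{0, 2}, None])
print(result)  # [[0.1, -inf, 0.2], [0.3, 0.3, 0.4]]
```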

# What does this PR do?

In a few cases, a model uses the Mistral or Mixtral architecture but not a Llama tokenizer. This PR therefore falls back to `AutoTokenizer` in the exception handler.

Similar to PR #619.

@Narsil
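The fallback pattern can be sketched with injectable loaders so it runs without downloading anything. In a real model loader, `primary` would be something like `LlamaTokenizerFast.from_pretrained` and `fallback` `AutoTokenizer.from_pretrained` (both exist in `transformers`); `load_tokenizer`, the stub loaders, and the model ids below are hypothetical.

```python
from typing import Any, Callable


def load_tokenizer(
    model_id: str,
    primary: Callable[[str], Any],
    fallback: Callable[[str], Any],
) -> Any:
    """Try the architecture-specific tokenizer first, then fall back."""
    try:
        return primary(model_id)
    except Exception:
        # e.g. a Mistral/Mixtral checkpoint that ships a non-Llama tokenizer
        return fallback(model_id)


def llama_only(model_id: str) -> str:
    # Stub standing in for a Llama-specific tokenizer loader.
    if "llama" not in model_id:
        raise ValueError(f"{model_id} does not ship a Llama tokenizer")
    return "llama-tokenizer"


def auto(model_id: str) -> str:
    # Stub standing in for AutoTokenizer.from_pretrained.
    return "auto-tokenizer"


print(load_tokenizer("my-org/mistral-custom-tok", llama_only, auto))  # auto-tokenizer
```

Catching a broad `Exception` keeps the fallback simple, at the cost of also hiding unrelated loading errors from the first attempt.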

# What does this PR do?


This PR resolves a couple of issues:

- [X] adjusts the tool response to align with OpenAI's tools response type
- [X] bumps pydantic to `2.6.4` in all apps (resolves a dependency issue when running tests)
- [X] bumps the `outlines` version and fixes the import for its new name
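For reference, OpenAI's Chat Completions API returns tool calls on the assistant message as a `tool_calls` list; each entry has an `id`, `type: "function"`, and a `function` object with a `name` and JSON-encoded `arguments`. The sketch below only builds that shape; `make_tool_call_message`, the call id, and the `get_weather` tool are hypothetical, and it does not claim to reproduce TGI's exact response.

```python
import json
from typing import Any


def make_tool_call_message(call_id: str, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build an assistant message shaped like an OpenAI tool-call response."""
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": call_id,
                "type": "function",
                "function": {
                    "name": name,
                    # OpenAI encodes the arguments as a JSON string, not an object.
                    "arguments": json.dumps(arguments),
                },
            }
        ],
    }


message = make_tool_call_message("call_0", "get_weather", {"location": "Paris"})
print(json.dumps(message, indent=2))
```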

This PR adds `force_downcast_after` to `FastRMSNorm.forward`, which is used in the Gemma model. References: https://github.com/huggingface/transformers/pull/29402 and https://github.com/huggingface/transformers/pull/29729

Setting `force_downcast_after=True` performs the `hidden_states * weight` multiplication in f32 and then downcasts to half precision. This differs slightly from the current implementation, which first casts `hidden_states` to half precision and then multiplies.
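The two orderings can be compared with a scalar sketch that uses the standard library's float16 packing (`struct` format `"e"`). Python floats are float64 here and stand in for the float32 upcast; `rms_norm` is an illustrative helper, not `FastRMSNorm`'s actual code.

```python
import math
import struct


def to_half(x: float) -> float:
    """Round a Python float to the nearest IEEE float16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def rms_norm(hidden, weight, eps=1e-6, force_downcast_after=False):
    # Normalize in high precision (float64 standing in for float32).
    variance = sum(h * h for h in hidden) / len(hidden)
    normed = [h / math.sqrt(variance + eps) for h in hidden]
    if force_downcast_after:
        # Multiply by the weight in high precision, then downcast once.
        return [to_half(n * w) for n, w in zip(normed, weight)]
    # Default path (the current implementation): downcast first,
    # then multiply in half precision (the product is rounded to float16).
    return [to_half(to_half(n) * w) for n, w in zip(normed, weight)]


hidden = [0.3, -1.7, 2.2, 0.05]
weight = [1.0, 0.5, 1.5, 2.0]  # all exactly representable in float16
print(rms_norm(hidden, weight))
print(rms_norm(hidden, weight, force_downcast_after=True))
```

With a weight of exactly 1.0 both paths agree; with other weights they can differ by a float16 rounding step, which is the "slight" difference described above.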

# What does this PR do?


This PR fixes parallel grammar requests. Currently, grammar states are not concatenated correctly when a new request is added to the batch, which results in incorrect generation. This PR updates the `concatenate` function to correctly include the previous states.

Fixes: #1601
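A minimal sketch of the idea behind the fix, assuming each batch keeps one FSM state per request in a list. The `GrammarBatch` class and its `fsm_grammar_states` field are hypothetical stand-ins, not TGI's actual classes; the point is that concatenation must carry over the running requests' current states rather than reinitialize them.

```python
from dataclasses import dataclass, field


@dataclass
class GrammarBatch:
    """Hypothetical batch that tracks one grammar FSM state per request."""

    request_ids: list[int] = field(default_factory=list)
    fsm_grammar_states: list[int] = field(default_factory=list)

    @classmethod
    def concatenate(cls, batches: list["GrammarBatch"]) -> "GrammarBatch":
        merged = cls()
        for batch in batches:
            merged.request_ids.extend(batch.request_ids)
            # Keep each request's current FSM state instead of resetting it,
            # so in-flight constrained requests keep their progress.
            merged.fsm_grammar_states.extend(batch.fsm_grammar_states)
        return merged


running = GrammarBatch(request_ids=[0, 1], fsm_grammar_states=[7, 3])
incoming = GrammarBatch(request_ids=[2], fsm_grammar_states=[0])
merged = GrammarBatch.concatenate([running, incoming])
print(merged.fsm_grammar_states)  # [7, 3, 0]
```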


# What does this PR do?


Uses a single `os.getenv` call instead of several, which should make truthy values easier to catch.

In the end, we did not move to a full CLI because modifying globals in Python is error-prone (it depends on import order).

Adds an error when Mamba is launched with tensor parallelism (TP).
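A minimal sketch of reading a flag with a single `os.getenv` call and normalizing truthy spellings. `env_flag`, the accepted spellings, and the `EXAMPLE_FLAG` variable are assumptions for illustration, not the variables this PR touches.

```python
import os

TRUTHY = {"1", "true", "yes", "on"}  # assumed set of accepted spellings


def env_flag(name: str, default: bool = False) -> bool:
    """Read an environment variable once and interpret common truthy spellings."""
    value = os.getenv(name)
    if value is None:
        return default
    return value.strip().lower() in TRUTHY


os.environ["EXAMPLE_FLAG"] = "True"  # hypothetical variable
print(env_flag("EXAMPLE_FLAG"))  # True
```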

# What does this PR do?


- Moved from float16 to bfloat16, which proved more numerically robust here: load tests fail with the updated kernels + f16 but all pass under bf16. Note that we do not respect the f32 layer norm defined in the configuration (this is OK in my book, but it could impact f16 precision).

- Moved to the update kernels. Triton overhead is very high; switching to CUDA graphs removes it and works great (an update kernel is also available in TRT-LLM if needed; it seems *exactly* like the regular SSM kernel).

- Restructured the `inference_params` struct so it holds only 2 tensors, reducing the overhead of copying back and forth to the CUDA graphs.

- The leftover overhead seems to be entirely in the tokenization step (4 copies are still paid before launching the graph).
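For context on the f16 to bf16 switch: float16's largest finite value is 65504, while bfloat16 keeps float32's 8-bit exponent and gives up mantissa bits instead. The stdlib-only sketch below illustrates that trade-off; the bfloat16 rounding is a hand-rolled round-to-nearest-even on the float32 bit pattern (NaN not handled), and whether range is what broke the f16 load tests is not stated above.

```python
import struct


def to_float16(x: float) -> float:
    """Round to IEEE float16; values beyond its range overflow to infinity."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return float("inf") if x > 0 else float("-inf")


def to_bfloat16(x: float) -> float:
    """Round to bfloat16 by rounding the float32 bit pattern to its top 16 bits."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits &= 0xFFFF0000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


print(to_float16(70000.0))   # inf: beyond float16's range
print(to_bfloat16(70000.0))  # 70144.0: in range, but coarsely rounded
print(to_float16(1.001), to_bfloat16(1.001))  # 1.0009765625 1.0
```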


# What does this PR do?


This PR adds the ability to run AWQ models with the Exllama/GPTQ kernels, specifically for ROCm devices that support Exllama kernels but not AWQ's GEMM.

This is done by:

- un-packing, reordering and re-packing AWQ weights when `--quantize gptq` is used but the model's `quant_method=awq`;
- avoiding overflows when adding 1 to zeros in the Exllama and Triton kernels.

Ref: https://github.com/casper-hansen/AutoAWQ/pull/313
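A pure-Python sketch of the un-pack / reorder / re-pack step for a single 32-bit word holding eight 4-bit values. It assumes, as we understand AutoAWQ's packing code, that AWQ interleaves nibbles in the order `[0, 2, 4, 6, 1, 3, 5, 7]` while GPTQ packs them sequentially; it ignores other layout differences between the formats (such as which axis is packed, or signed int32 storage), and all helper names are hypothetical.

```python
AWQ_ORDER = [0, 2, 4, 6, 1, 3, 5, 7]  # assumed: nibble slot i holds logical value AWQ_ORDER[i]


def unpack_int4(word: int) -> list[int]:
    """Split a 32-bit word into eight 4-bit values, lowest nibble first."""
    return [(word >> (4 * slot)) & 0xF for slot in range(8)]


def pack_int4(values: list[int]) -> int:
    """Inverse of unpack_int4: sequential (GPTQ-style) packing."""
    word = 0
    for slot, value in enumerate(values):
        word |= (value & 0xF) << (4 * slot)
    return word


def awq_pack(values: list[int]) -> int:
    """Interleaved AWQ-style packing, used here only to build test input."""
    return pack_int4([values[idx] for idx in AWQ_ORDER])


def awq_to_gptq(word: int) -> int:
    """Un-pack an AWQ word, undo the interleaving, and re-pack sequentially."""
    nibbles = unpack_int4(word)
    logical = [0] * 8
    for slot, idx in enumerate(AWQ_ORDER):
        logical[idx] = nibbles[slot]
    return pack_int4(logical)


values = [3, 15, 0, 7, 9, 1, 12, 5]
assert awq_to_gptq(awq_pack(values)) == pack_int4(values)

# Zero-point pitfall: adding 1 to a zero of 15 inside a 4-bit field wraps around.
print((15 + 1) & 0xF)  # 0
```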


---------

Co-authored-by: Nicolas Patry <patry.nicolas@protonmail.com>

# What does this PR do?


## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).
- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?
- [x] Was this discussed/approved via a GitHub issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.
- [x] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).
- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.

@OlivierDehaene OR @Narsil

This draft PR is a work-in-progress implementation of the Mamba model.

It currently loads weights and produces correct logits after a single pass.

It still needs to integrate the model correctly so that it produces tokens as expected, and to apply optimizations that avoid all copies and unnecessary operations at runtime.
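For readers new to Mamba, the per-token recurrence that makes decoding stateful can be sketched as `h_t = exp(Δ·A) * h_{t-1} + Δ·B·x_t` and `y_t = C·h_t + D·x_t`, following the simplified discretization used in mamba-minimal. This is a single-channel, pure-Python illustration with made-up numbers; in the real model Δ, B and C are computed from the input at every step (the "selective" part), and this is not the fused kernel code integrated here.

```python
import math


def selective_scan_step(
    h: list[float],
    x: float,
    delta: float,
    A: list[float],
    B: list[float],
    C: list[float],
    D: float,
) -> tuple[list[float], float]:
    """One recurrent step for a single channel with state size N = len(h)."""
    # Discretize: A_bar = exp(delta * A); B_bar * x = delta * B * x (simplified).
    new_h = [math.exp(delta * a) * h_n + delta * b * x for h_n, a, b in zip(h, A, B)]
    # Read out: y = C . h + D * x (D acts as a skip connection).
    y = sum(c * h_n for c, h_n in zip(C, new_h)) + D * x
    return new_h, y


# Toy run over a short sequence; the state h carries information between tokens.
A = [-1.0, -0.5]  # negative so exp(delta * A) < 1 and the state decays
B = [1.0, 0.5]
C = [0.5, 1.0]
h = [0.0, 0.0]
outputs = []
for x in [1.0, 0.0, 2.0]:
    h, y = selective_scan_step(h, x, delta=0.1, A=A, B=B, C=C, D=1.0)
    outputs.append(y)
print(outputs)
```

Because each step only needs the previous `h`, generation can keep a fixed-size state per request instead of a growing KV cache.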

#### Helpful resources

- [Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Albert Gu and Tri Dao)](https://arxiv.org/abs/2312.00752)
- https://github.com/johnma2006/mamba-minimal
- https://github.com/huggingface/candle/blob/main/candle-examples/examples/mamba-minimal/model.rs
- https://github.com/huggingface/transformers/pull/28094

Notes: this dev work currently targets `state-spaces/mamba-130m`, so please use that model if you want to test. Additionally, when starting the router, the prefill needs to be limited: `cargo run -- --max-batch-prefill-tokens 768 --max-input-length 768`

## Update / Current State

Integration tests have been added, and basic functionality such as model loading is supported.

```bash

cd integration-tests

pytest -vv models/test_fused_kernel_mamba.py

```

- [x] add tests

- [x] load model

- [x] make simple request

- [ ] resolve warmup issue

- [ ] resolve output issues

Fetching the models tested during development:

```bash

text-generation-server download-weights state-spaces/mamba-130m

text-generation-server download-weights state-spaces/mamba-1.4b

text-generation-server download-weights state-spaces/mamba-2.8b

```

The server can be run with:

```bash

cd server

MASTER_ADDR=127.0.0.1 MASTER_PORT=5555 python text_generation_server/cli.py serve state-spaces/mamba-2.8b

```

Start the router:

```bash

cargo run

```

Make a request:

```bash
curl -s localhost:3000/generate \
    -X POST \
    -d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \
    -H 'Content-Type: application/json' | jq
```

Response:

```json

{

"generated_text": "\n\nDeep learning is a machine learning technique that uses a deep neural network to learn from data."

}

```
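A standard-library Python equivalent of the `curl` request above, assuming the router listens on `localhost:3000` as in that example. `build_generate_request` is a hypothetical helper; the actual send is left commented out so the snippet runs without a server.

```python
import json
import urllib.request


def build_generate_request(
    prompt: str, max_new_tokens: int = 20, url: str = "http://localhost:3000/generate"
) -> urllib.request.Request:
    """Build a POST request with the same JSON body as the curl example."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_generate_request("What is Deep Learning?")
# With the router running, send it and read the generated text:
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read())["generated_text"])
```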

---------

Co-authored-by: Nicolas Patry <patry.nicolas@protonmail.com>

# What does this PR do?


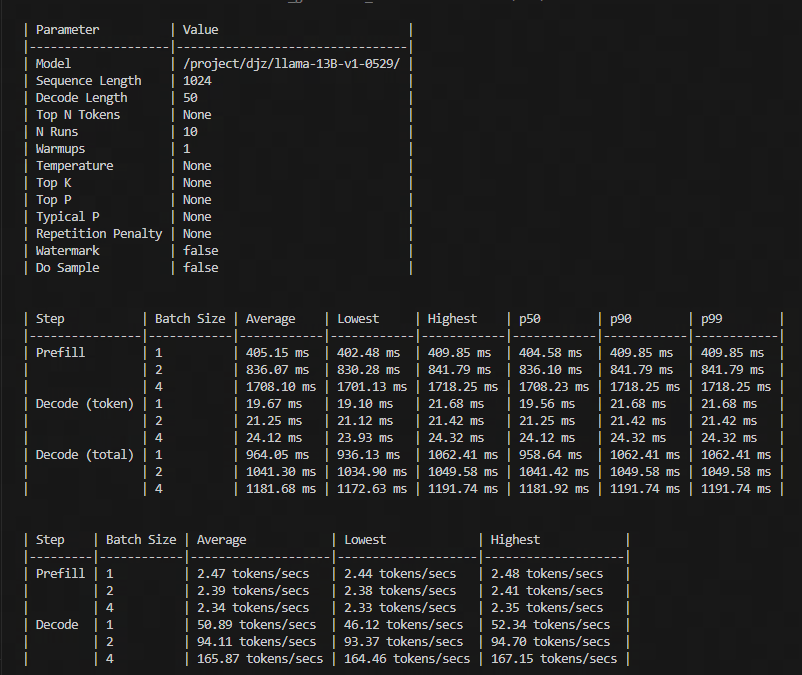

Add TensorRT-LLM weight-only GEMV kernel support. We extract the GEMV kernel from [TensorRT-LLM](https://github.com/NVIDIA/TensorRT-LLM/tree/main/cpp/tensorrt_llm/kernels/weightOnlyBatchedGemv) to accelerate EETQ decoding when `batch_size` is less than or equal to 4.

- Features

1. There is almost no loss of quantization accuracy.

2. Decoding is 13% to 27% faster than the original EETQ, which uses a GEMM kernel.

- Test

Below is our test on an RTX 3090. Environment: torch=2.0.1, CUDA 11.8, NVIDIA driver 525.78.01.

prompt=1024, max_new_tokens=50
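The batch-size cutoff described above amounts to a simple dispatch rule. A hypothetical sketch: the function name and string labels are illustrative only, and the PR does not show the actual dispatch code.

```python
GEMV_MAX_BATCH = 4  # threshold stated in the PR description


def choose_kernel(batch_size: int) -> str:
    """Pick the weight-only GEMV kernel for small decode batches, GEMM otherwise."""
    return "gemv" if batch_size <= GEMV_MAX_BATCH else "gemm"


for bs in (1, 4, 8):
    print(bs, choose_kernel(bs))  # 1 gemv / 4 gemv / 8 gemm
```

Small decode batches are dominated by reading the quantized weights, which is the case a GEMV kernel is designed for; larger batches go back to GEMM.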


# What does this PR do?

Supersedes #1459

The fix works as follows.

We updated `next_token_chooser` to return all logprobs; `batch_top_n_tokens` now also receives `accepted_ids` and `speculated_length` (so it knows how to interpret the flat logprobs).

We then update the code to return the lists of `Tokens` it expects.
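A minimal sketch of how flat logprobs could be interpreted with `accepted_ids` and `speculated_length`. It assumes each request owns `speculated_length + 1` consecutive positions in the flat list and that only its first `accepted_ids[i]` positions are kept; the helper name and this layout are assumptions, and real code works on tensors of top-n logprobs rather than single floats.

```python
def split_flat_logprobs(
    flat: list[float],
    accepted_ids: list[int],
    speculated_length: int,
) -> list[list[float]]:
    """Slice a flat per-position list into the accepted positions of each request."""
    stride = speculated_length + 1  # assumed: one regular token + speculated ones
    per_request = []
    for i, n_accepted in enumerate(accepted_ids):
        start = i * stride
        per_request.append(flat[start : start + n_accepted])
    return per_request


# Two requests, speculating 2 tokens each -> 3 positions per request.
flat = [-0.1, -0.2, -0.3, -1.0, -2.0, -3.0]
print(split_flat_logprobs(flat, accepted_ids=[3, 1], speculated_length=2))
# [[-0.1, -0.2, -0.3], [-1.0]]
```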


# What does this PR do?


---------

Co-authored-by: Choon Meng Tan <choonmeng@aisingapore.org>

Co-authored-by: David Ong Tat-Wee <13075447+ongtw@users.noreply.github.com>

# What does this PR do?


This PR adds basic modeling for phi-2.

Run:

```bash
text-generation-server \
    serve \
    microsoft/phi-2 \
    --revision 834565c23f9b28b96ccbeabe614dd906b6db551a
```

Test:

```bash
curl -s localhost:3000/generate \
    -X POST \
    -d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \
    -H 'Content-Type: application/json' | jq .
# {
#   "generated_text": "\nDeep learning is a subset of machine learning that uses artificial neural networks to learn from data. These"
# }
```
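For scripted testing, the same request can be sent from Python. This is a minimal sketch using only the standard library (`json`, `urllib.request`); it assumes the HTTP endpoint is reachable at `http://localhost:3000/generate` as in the `curl` example above, and the helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request


def build_generate_request(
    prompt: str,
    max_new_tokens: int,
    url: str = "http://localhost:3000/generate",  # assumed endpoint, as in the curl example
) -> urllib.request.Request:
    """Build the same POST request as the curl example (hypothetical helper)."""
    body = json.dumps(
        {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    ).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, max_new_tokens: int = 20) -> str:
    """Send the request and return the `generated_text` field of the response."""
    req = build_generate_request(prompt, max_new_tokens)
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))["generated_text"]
```

With the server running, `generate("What is Deep Learning?")` should return the same kind of text as the `curl` call. Request construction is split from sending so the payload can be checked without a live server.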

Notes:

- Recently (~1 day ago), the Phi weights and modeling code were updated to accommodate adding [GQA/MQA attention to the model](https://github.com/huggingface/transformers/pull/28163). This implementation expects the original model format, so a fixed revision is required for now.
- This PR only includes a basic implementation of the model. It can later be extended to support Flash and sharded versions and to make use of further optimizations.

# What does this PR do?


# What does this PR do?


Close #1418, close #1415


When the model ID is overloaded with a local directory, the code still needs that directory to locate the weight files correctly.
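The idea can be sketched as follows. This is a minimal illustration, assuming weights are stored as `*.safetensors` files directly inside the local directory. The helper `local_weight_files` is hypothetical and is not the project's actual weight-resolution function. The key point is that the returned paths keep the directory prefix instead of being bare file names.

```python
from pathlib import Path
from typing import List


def local_weight_files(model_id: str, extension: str = ".safetensors") -> List[Path]:
    """Hypothetical helper: resolve weight files when model_id is a local directory.

    Returns full paths (directory + file name), so callers can open the
    files regardless of the current working directory.
    """
    model_dir = Path(model_id)
    if not model_dir.is_dir():
        raise ValueError(f"{model_id} is not a local directory")
    # Path.glob yields paths that already include model_dir as their parent.
    files = sorted(model_dir.glob(f"*{extension}"))
    if not files:
        raise FileNotFoundError(f"No {extension} weights found in {model_id}")
    return files
```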

# What does this PR do?
